Badly designed surveys don’t promote sustainability, they harm it

Brands love reported data that shows people care about sustainable consumption, but these spurious findings just hold back real behaviour change.

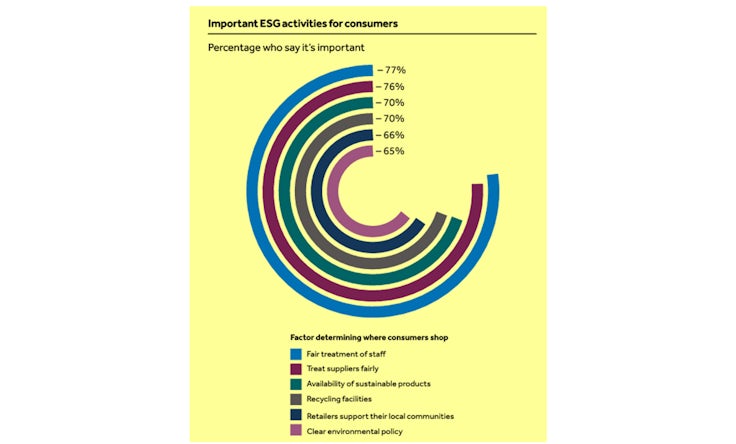

At some point last week, an ad from Barclays reminded me that 70% of consumers think sustainability is important in determining where they shop. I harumphed. Loud enough for the woman at the next table with a small dog to give me a hard stare. A Google search later and I found the source: a Barclays Corporate Banking report from last year entitled ‘What’s in store in retail’.

It was a report conducted by Censuswide for Barclays and built from a survey of 600 senior retailers and 2,000 British consumers. Given the report does not attempt to slice or dice its sample into smaller segments, that’s more than enough people for the results to pass the standard tests of confidence and prove extrapolatable to the managerial and consumer populations it reports on.
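
For anyone who wants to check that claim, here is a minimal sketch of the usual margin-of-error calculation for a survey proportion. It assumes a simple random sample and the normal approximation, neither of which the report confirms. The function name, the 1.96 multiplier for 95% confidence and the worst-case 50% figure for retailers are illustrative choices of mine, not anything taken from Barclays or Censuswide.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Half-width of the normal-approximation confidence interval for a proportion.

    p: observed proportion (0..1), n: sample size, z: critical value
    (1.96 corresponds to a 95% confidence level).
    """
    return z * math.sqrt(p * (1 - p) / n)


# The 70% headline figure from the 2,000-consumer sample.
consumer_moe = margin_of_error(0.70, 2000)

# The 600 senior retailers, using p = 0.5 as the worst case (assumed, not reported).
retailer_moe = margin_of_error(0.50, 600)

print(f"Consumers: +/- {consumer_moe * 100:.1f} points")
print(f"Retailers: +/- {retailer_moe * 100:.1f} points")
```

On those assumptions the consumer figure carries a margin of roughly two percentage points and the retailer figures roughly four. Sampling error, in other words, is not where the problem lies.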

So why harumph?

It was the question that was asked to get this 70% number. Consumers were presented with six different environmental, social and governance (ESG) factors and asked which they felt were important in determining where they shop. The results portray UK shoppers as remarkably attuned to all the various ESG issues. Two-thirds of the population are driven to shop at locations because of all these factors. Several of them – treatment of staff, treatment of suppliers – represent a driving factor for three-quarters of British shoppers. Wow!

The results do seem a little at odds with the reality of high street shopping. If the fair treatment of staff is such a driver for purchase how come Amazon ‘we time your piss breaks’ UK Ltd is one of the most popular retailers in the country? If supplier treatment is also top of the list, how come Tesco – ‘we squeeze suppliers to keep prices down’ – is so huge? And if support for local communities is so incredibly strong why does US private equity-owned Morrisons do so well? Based on this data, shouldn’t most British shoppers be avoiding all these top 10 retailers and shopping at the Co-op and Oxfam instead?

The importance of survey design

One of the most important lessons of market research is to use qualitative data to identify the variables and, only then, use quantitative surveys to measure the outcomes. Qual on its own is weak and fluffy. Quant on its own is precisely wrong. So qual must drive quant. Otherwise, you measure the importance of A, B and C when X, Y and Z were also relevant and far more important to a consumer who does not share your agenda or worldview.

But that’s a problem for agenda-based research like the Barclays survey, because if the qual drives the quant, suddenly it’s not about whether you support the things we want you to think are important, like the environment or communities. Instead, it becomes a proper study of what really drives consumer choice, and that might mean what we think is important will not match with the only reality that matters: that of the consumer. If respondents had been asked to inductively describe why they shop where they shop without the pre-existing list of ESG drivers, would two-thirds of them still have mentioned recycling or the treatment of suppliers? Of course not.

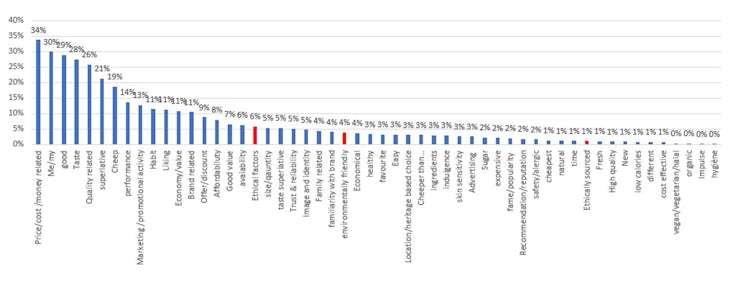

And there is evidence to support my cynicism. Research firm Kantar asks 100,000 people each year to list products they have recently purchased. Then, rather than introducing and measuring drivers for these choices, researchers explore verbatims from each consumer who describes why they bought what they bought. These verbatims are then coded and their frequency measured across the total sample.

Done this way – inductively and contextualised with all the grounded drivers of real purchase – a very different picture emerges. Environmental issues are mentioned by only 4% of the market. Ethical issues (6%) and sourcing (1%) drop to a fraction of the proportions suggested by Barclays. The other ESG factors relating to staff, suppliers and local communities disappear completely from consumer decision-making. Indeed, all six of Barclays’ ESG factors now have the same combined importance for consumers as ‘habit’. And that’s just for beauty purchases. The importance of ESG drivers slips down even further for other categories like alcohol, mobile and snacking.

And there is a second, even bigger issue with asking consumers to report on these kinds of ESG factors. The natural tendency is to over-report their importance because of social desirability bias. You know the story (it’s a bit of a blind spot for me): the desire not to look like a wanker in front of strangers.

I have spent hours, and I mean hours, debating this single topic with Mary Kyriakidi from Kantar. We have dreamed of running away from our day jobs to work on a ‘Mary/Mark Coefficient’. It would essentially be a bullshit detector but Mary is too polite to call it this. We would take claimed data like Barclays’ and apply a reductive equation based on survey topic, sample demography and questionnaire format thus reducing the original BS PR claimed number down to a more accurate base. Barclays claims 70% of consumers choose a retailer because of the availability of sustainable products… beep… beep… beep… becomes 12% once you apply the Mary/Mark Coefficient.

And 12% would not be a bad guess, either. The old David Ogilvy quote, stolen from Margaret Mead, is as relevant and powerful today as ever. “People”, the great ad man once opined, “don’t think what they feel, don’t say what they think and don’t do what they say”. We concur. Indeed, we might improve the old legend’s quote with the addendum “especially when it comes to ESG stuff”.

Mary has data showing all kinds of shortfalls when you compare what people say they do when it comes to ESG and what they actually do. When you compare accurate behavioural data derived from secondary data or panel information with what comes out in surveys from the very same people, the results can be eye-opening. She can show you that 58% of the 46% of the British population who say they avoid buying fast-fashion products bought something from a fast-fashion retailer last year, for example. These people aren’t necessarily lying. I mean, some of them are. But others are forgetful. Indulgent. Ignorant. Embarrassed. Unreliable. Boastful. They are people, after all.

Until, of course, marketers turn them into bar charts and start assuming their inaccurate responses are data points accurate at the 95% confidence level.
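
To put the fast-fashion figures in whole-population terms, here is a quick back-of-envelope sketch. It assumes the 58% is measured among the 46% who claim to avoid fast fashion, which is how I read Mary's numbers. The variable names are mine.

```python
# Share of the British population who say they avoid fast fashion.
claimed_avoiders = 0.46

# Share of those claimed avoiders who bought from a fast-fashion retailer last year.
bought_anyway = 0.58

# Population share whose claim is contradicted by their own purchases.
say_but_bought = claimed_avoiders * bought_anyway

# Population share whose claim is at least not contradicted by the behavioural data.
say_and_abstained = claimed_avoiders * (1 - bought_anyway)

print(f"Say they avoid it but bought anyway: {say_but_bought:.1%}")
print(f"Say they avoid it and did not buy:   {say_and_abstained:.1%}")
```

So the 46% who claim to avoid fast fashion shrinks to something under a fifth of the population once behaviour is taken into account, and more than a quarter of the country is saying one thing and doing another.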

Techniques for more reliable responses

One simple but usually ignored fix is to employ honesty priming and a more human tone of voice ahead of any questions that invoke social signalling or personal shame. If you ask consumers in the portentous booming style of most surveys, ‘Are you up to date with your pet’s vaccinations?’, a whopping 96% of British consumers will say yes. Vaccinations are one of the reasons for this answer. But so are shame, inattention and bad survey design.

If, however, you start with a disclaimer that the consumer will now be asked about a series of common tasks that people often put off because of time, effort, cost or forgetfulness, then ask, ‘Have you taken your dog or cat to get its latest vaccinations?’ with the options ‘Done this task’, ‘That reminds me, I need to do this’ or ‘N/A, this task does not apply to me’, the number of people claiming to be up to date drops down to 62%.

That’s a difference of 34 percentage points. A third of the market. That’s not just a rounding error. That’s the difference between there being no need to do anything and the potential to generate millions of pounds with a reminder campaign. Question design matters. Not just because it produces very different answers but because these answers result in very different marketing outcomes.

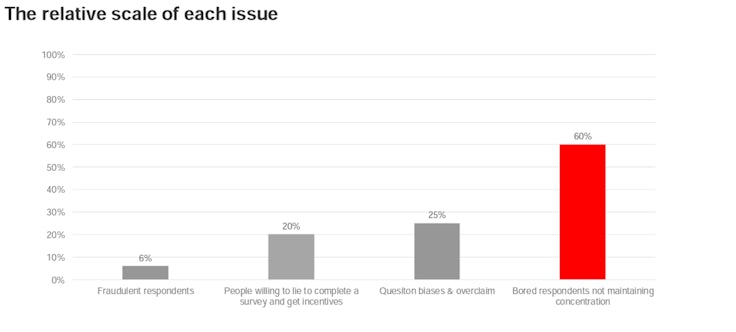

The guru on this kind of fallibility is Kantar’s Jon Puleston. He has made a career of identifying these errors and then helping clients avoid them with innovation and expertise. One of his many insights is the relative scale of different issues that can and will skew data away from accuracy, shown above. Fraud does play a role and we tend to talk about it the most. But question bias and overclaim are significantly more distorting.

But Puleston’s biggest point is the big bastard red column over to the right of the chart above. Towering above fraud, bad questions, bias and social overclaim is simple boredom. As marketers add more questions to their surveys, the likelihood of bingo-based answering goes through the roof. We’ve all done it. As the dropdown boxes pile up, as the questions inch into triple figures, we start to provide arbitrary, random answers and the statistically significant data becomes empirically worthless. Only research companies and marketers think anyone (assuming the absence of human/electrical induction methods) is going to concentrate on more than 30 questions about anything.

Perils of claimed behaviour

To be fair to Censuswide, maybe it was on top of all these issues when it conducted the Barclays research. Maybe it used a panel that removes fraudulent respondents and screens for fraudulent answers. Perhaps it employed honesty priming throughout the survey and posed questions in a more grounded, authentic tone. And maybe its questionnaire was in and out in 20 questions and avoided respondent exhaustion.

But it’s still a bad survey because, while it asks a question that consumers can answer, it asks a question that consumers don’t know the answer to. And there is a difference. And marketers are meant to know that. And that was the source of my weekend harumph.

Consumers don’t know what drives their behaviour. It’s a dumb question to which you will get an entirely plausible answer. But one that has zero validity. Questionnaires are great at getting basic data from a consumer. Do you own a dog? How many dogs? How old is your dog? I also believe questionnaires are good at accessing consumer thoughts and feelings about things. How much does a consumer agree that vaccinations are important? How easy is it to vaccinate your dog?

But survey questions fail when they ask consumers to explain their behaviour or atomise it into a convenient list of ranked drivers. Which of these factors explain why you vaccinated your dog? Is brand of vaccine important in your choice? What marketing tools are the most effective when choosing dog products? Rank from 1 to 7 in descending order the most important attributes of a superior dog vaccine. If your dog became ill and you were living with a friend who did not have a car, which other options of transport, in order, would you use to get to the vet assuming normal rates of traffic?

At this point, reported data becomes almost worthless. We need to move into the more complex realm of derived data. Research that links brand preferences to brand perceptions and explores the impact of perceived brand attributes on the likelihood of actual preference and purchase. Or which offers up alternative choices and observes which option the consumer chooses and imputes why. Or which goes the whole hog and offers an alternative option in a marketing experiment that compares sales to a control offering online or in the actual real world of the market.

It’s not good enough to ask consumers if the availability of sustainable products influences where they shop. Partly because they will give you a false answer to impress you. Partly because it depends on the other trade-offs that they might have to make. Partly because by question 42 you might as well be asking them in Klingon. But mostly because they simply don’t know the degree to which it influences them. And they don’t know that they don’t know.

If we did some qualitative research on consumers to understand what the main factors were in driving choice of store, then we dumped these attributes into a conjoint study in which they were traded off against price, there is no way that this more appropriate method would reveal anything like this level of importance for ESG factors. Some of them would not appear on the radar at all.

Harming the ESG agenda

I’m not having a go at Barclays or Censuswide. Well, obviously I am. But I don’t want to single them out. So many marketers do this that I could literally have picked a different example every week for the next 12 months to feature. We all make this mistake. Kantar has made this mistake. I have made this mistake. You too – yes, you. And we should all draw the line between what can and cannot be trusted from simple survey data.

That’s partly because it’s just plain bad practice. Partly because it leads to some highly vulnerable marketing executions. But also, because we keep peppering the business world with over-optimistic, highly specious horseshit about how consumers think and act.

Allegedly, 49% of consumers depend on influencers for product recommendations and 74% of consumers use social media to help them make purchasing decisions. Meanwhile, 82% of consumers think that a brand’s values need to align with their own before they purchase it and 83% of US consumers think companies should be actively shaping ESG best practices. Amazing! Especially considering another poll completed this month confirms that 78% of Americans don’t know what the acronym ESG even means.

Reporting all these false positives from inappropriate research designs about the importance of ESG and sustainability does not help the cause, it hinders it. Over the next decade, all of us in marketing and the broader community face an existential challenge to stop the destruction of our planet from a dependence on fossil fuels. If we keep reporting erroneous findings like ‘sustainable products are a factor for 70% of consumers’, we might, entirely understandably but completely incorrectly, conclude that change is coming and a consumer-led revolution is under way. We might start thinking that marketing and advertising can save the world.

Nothing could be further from the truth. Consumers will keep buying too much, too often made from single-use plastic and other unmentionables, as they drive around in their fossil-fuelled cars (whether they have a petrol or electric powertrain) in a hopeless quest for personal and social redemption. Consumers are selfish, fucked-up creatures who only sound like they care about the planet, others and global equality in the surveys they occasionally take. As soon as the clipboard closes, the credit cards come back out. Selfish, unthinking behaviour trumps stated, altruistic intention every time. And twice on Saturdays.

Lazy surveys make it look like people care. But the high street tells a completely different story. How many more years are we going to accept the plaintive, entirely bogus claims of Gen Y – dressed head to toe in fast fashion – as they tell us that they won’t buy anything made by non-union labour using non-environmentally sourced materials working at unethical organisations that do not reflect their values?

The sooner we wake up to bad research design and the impact it is having on a false global consciousness, the sooner we will appreciate the remarkable absence of consumer agency in saving the planet. And the quicker we can come to the conclusion that we need to utilise approaches to climate disaster and the general fucked-upness of things that are more stringent, effective and ultimately hopeful than marketing.

I know that 88% of you agree with me.

Mark Ritson teaches the Mini MBA in Marketing, which 95% of alumni said made them a more effective marketer. Whether this was a reason for students signing up is something he and his surveys cannot possibly estimate.